Lighting Up Learning: a plan to put an AI teacher in Kharian

If you’re reading this, you probably don’t realise how lucky you are. Millions of people can’t even read their own names. What did you or I do to deserve our literacy, our laptops, our bottomless search bars? Nothing. We just happened to wake up one day in the families and postal codes we were given.

I never chose to be born in the UK to Pakistani immigrants, yet that single accident of birth changed everything. Whenever I visit relatives back in Pakistan—usually in Kharian these days—I’m treated like a budget-price celebrity because one British pound buys about 370 rupees. The exchange rate flatters me, but it also highlights the invisible wall that separates me from the kids chasing ten-rupee notes through dusty gutters while the wedding party climbs into air-conditioned cars.

I love Pakistan, but I hate how power is hoarded by dynasties and loudspeakers. In many villages critical thinking never gets taught, so a local cleric’s endorsement can swing an entire constituency. I still remember my Year-9 English teacher, Mr Kearns, explaining how two newspapers could describe the same event in opposite ways. That single lesson unlocked a lifetime habit of asking, who’s talking and why? I’m certain most children in rural Punjab never get that push. And not everyone can—or wants to—emigrate. So what can we do for the millions left behind by a threadbare school system and chronic load-shedding?

I think the only way out of Pakistan’s mess is to give every child access to a quality education and raise a generation of critical thinkers who can demand better and push for reform. To fix the country, we must start with the children who currently have no access to good schooling.

How will we do that?

Meet the pocket-sized teacher

My answer is a bilingual AI tutor that can live on a second-hand computer and keep working when the power goes out. Large language models have already become my personal lawyer, doctor and ADHD wrangler; why not compress some of that magic into a form that will run on a beat-up Dell OptiPlex, a Lenovo ThinkCentre or a decade-old ThinkPad? No internet needed. Just a device (phone, laptop, tablet, whatever) that can connect to Wi-Fi. (Note: this is different from needing internet! A Wi-Fi network can be purely local, so devices can reach the tutor over it with no internet connection at all.)

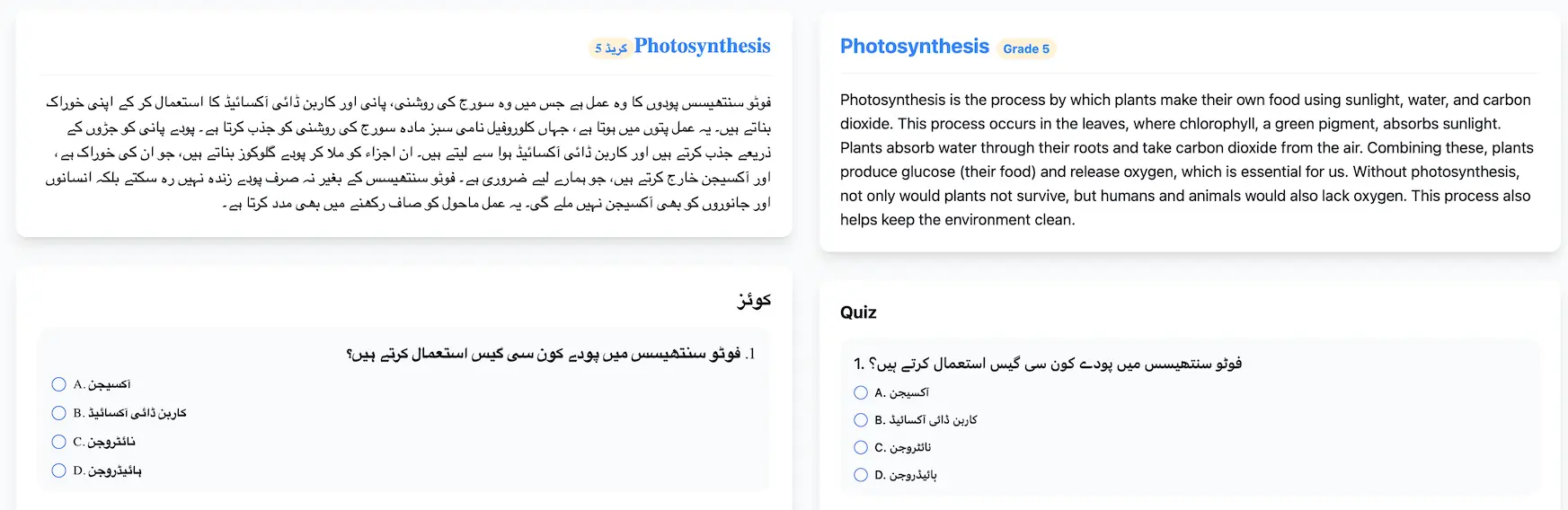

The LLM I’m using as the brain is Qwen3-1.7B (1.7 billion parameters): small enough to survive on a CPU once I quantise it to 4 bits, but big enough to juggle English and Urdu. I’m fine-tuning it so that every reply it gives is a neat little lesson package: an Urdu explanation written in Nastaliq script, a matching English version for teachers, and a few multiple-choice questions with an answer key, all wrapped in a single JSON file the front-end can display or print. One file, one lesson, no internet required. I also expect that pairing every Urdu explanation with its English counterpart will strengthen the model’s bilingual abilities.

```json
{
  "topic": "Photosynthesis",
  "grade": "5",
  "explanation": "<250–350 words of Urdu script>",
  "explanation_en": "<up to 250 words of English>",
  "quiz": [
    { "question": "…", "options": ["A…","B…","C…","D…"] },
    ...
  ],
  "answer_key": ["A", "B", "C"]
}
```

A mockup of how lesson content would display on the offline education system
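Because the front-end depends on every lesson having the same shape, it helps to check each generated file before it goes into the training set or onto the classroom PC. Here is a minimal sketch, assuming the structure shown above. The specific rules (exactly four options per question, one answer letter from A to D per question, some Arabic-script characters in the Urdu explanation) are my reading of that sketch rather than a fixed spec, and `validate_lesson` is a hypothetical name.

```python
import json

# Keys every lesson file is expected to carry (read off the JSON sketch).
REQUIRED_KEYS = {"topic", "grade", "explanation", "explanation_en", "quiz", "answer_key"}
LETTERS = ["A", "B", "C", "D"]


def validate_lesson(raw: str) -> list[str]:
    """Return a list of problems found in one lesson file; an empty list means it passed."""
    try:
        lesson = json.loads(raw)
    except json.JSONDecodeError as err:
        return [f"not valid JSON: {err}"]
    if not isinstance(lesson, dict):
        return ["top level must be a JSON object"]

    missing = REQUIRED_KEYS - set(lesson)
    if missing:
        return [f"missing keys: {sorted(missing)}"]

    problems = []
    quiz, key = lesson["quiz"], lesson["answer_key"]
    # Every question needs exactly one answer in the key.
    if len(quiz) != len(key):
        problems.append(f"{len(quiz)} questions but {len(key)} answers")
    for i, q in enumerate(quiz):
        if len(q.get("options", [])) != 4:
            problems.append(f"question {i + 1} does not have exactly four options")
    for i, letter in enumerate(key):
        if letter not in LETTERS:
            problems.append(f"answer {i + 1} is {letter!r}, expected one of A-D")

    # Crude language check: Urdu letters live in the Arabic Unicode block (U+0600 to U+06FF).
    if not any("\u0600" <= ch <= "\u06FF" for ch in lesson["explanation"]):
        problems.append("explanation contains no Arabic-script (Urdu) characters")
    return problems
```

Running this over the Gemini-generated lessons catches malformed examples before they reach fine-tuning, and the same check could run in the app to reject a bad model reply and ask again.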

How do you teach a small model big tricks?

A tiny model needs good mentors, so I use heavyweight “teacher” models such as Gemini 2.5 Pro to create seed lessons, then smaller, faster models such as Gemini 2.5 Flash to expand those polished seed examples into a larger dataset. Unsloth keeps the fine-tuning light enough to run on Google Colab, and the first batch of data cost me less than ten dollars. If the results look solid, I’ll spin up a longer run on RunPod and maybe jump to Qwen3-4B, but I’ll first have to test how that runs on a CPU.
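Whether the 4B model is practical on a CPU is ultimately something to measure, but a back-of-the-envelope estimate helps. The sketch below assumes about 4.5 bits per weight (4-bit values plus the per-block scale factors that 4-bit formats typically store) and single-channel DDR4-2400 memory at roughly 19.2 GB/s. Both are my assumptions, not measurements of the actual machine. It also uses the common rule of thumb that generating each token on a CPU means reading every weight once, so memory bandwidth divided by model size gives a ceiling on speed.

```python
# Rough sizing for running a quantised model on a CPU.
# All numbers are assumptions for illustration, not measurements.


def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Size of the model weights alone in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def max_tokens_per_second(weights: float, bandwidth_gb_s: float) -> float:
    """Upper bound on generation speed: each new token reads every weight once,
    so speed cannot exceed memory bandwidth divided by model size."""
    return bandwidth_gb_s / weights


BITS = 4.5         # assumed: 4-bit values plus per-block scales
BANDWIDTH = 19.2   # assumed: single-channel DDR4-2400, theoretical peak in GB/s

for name, params in [("Qwen3-1.7B", 1.7), ("Qwen3-4B", 4.0)]:
    size = weights_gb(params, BITS)
    print(f"{name}: ~{size:.2f} GB of weights, "
          f"at most ~{max_tokens_per_second(size, BANDWIDTH):.0f} tokens/s")
```

Under these assumptions the 1.7B model needs roughly 1 GB for its weights and the 4B model about 2.25 GB, both comfortably inside an 8 GB machine before the OS, the app and the context cache take their share. The speed ceilings come out around 20 and 9 tokens per second. Real speeds will be lower, and a second memory stick running in dual channel would roughly double the ceiling.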

Shrinking Wikipedia to bite-size

Small models choke on long articles, so I’m implementing a simple Retrieval-Augmented Generation (RAG) layer. Feed the system a Wikipedia page about, say, photosynthesis; it slices the text into bite-size chunks, keeps only the chunks most relevant to the lesson, and passes those to the model. That way we can feed in very long articles, which the small 1.7B model struggles with, and the model only ever sees the most relevant bits. Training the model on chunks from day one teaches it to think in pieces instead of drowning in walls of text.
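Here is a minimal sketch of the chunk-and-retrieve step. It assumes a plain word-overlap ranking in which words found in fewer chunks count for more (a simplified IDF weighting); the real layer might use BM25 or embeddings instead. The 120-word chunk size, the 30-word overlap, and the names `chunk_words`, `tokens` and `top_chunks` are illustrative choices, not settled parts of the system.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, size: int = 120, overlap: int = 30) -> list[str]:
    """Split text into chunks of `size` words; neighbouring chunks share `overlap`
    words so text cut at a boundary keeps some context on both sides."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def tokens(text: str) -> set[str]:
    # Lower-cased word set; the regex word class also matches Urdu letters.
    return set(re.findall(r"\w+", text.lower()))


def top_chunks(query: str, chunks: list[str], k: int = 3) -> list[str]:
    """Return up to k chunks that share words with the query, in their original order."""
    chunk_tokens = [tokens(c) for c in chunks]
    n = len(chunks)
    # Document frequency: how many chunks contain each word.
    df = Counter(word for toks in chunk_tokens for word in toks)
    query_words = tokens(query)

    def score(toks: set[str]) -> float:
        # Words found in few chunks are more informative, so they weigh more.
        return sum(math.log(1 + n / df[w]) for w in query_words & toks)

    scores = [score(t) for t in chunk_tokens]
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    keep = sorted(i for i in ranked[:k] if scores[i] > 0)
    return [chunks[i] for i in keep]
```

Returning the winning chunks in their original order, rather than by score, keeps the passage readable for the model.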

Experiment: I’m testing whether letting the system write its own questions first (“What does the leaf do?”) and then fishing through the chunks for answers produces better lessons. It’s slower, but it should generate more focused content. I’m still debating whether this step belongs in training or only at inference time.
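The question-first idea can be sketched as: ask the model for questions about the topic, then retrieve evidence for each question separately. In this sketch `generate_questions` is a hypothetical stand-in for a call to the fine-tuned model, and ranking chunks by the number of shared words is a deliberately crude assumption.

```python
import re
from typing import Callable


def words(text: str) -> set[str]:
    # Lower-cased word set used for a simple overlap comparison.
    return set(re.findall(r"\w+", text.lower()))


def question_first_retrieval(
    topic: str,
    chunks: list[str],
    generate_questions: Callable[[str], list[str]],  # hypothetical model call
    per_question: int = 2,
) -> list[tuple[str, list[str]]]:
    """For each self-written question, pick the chunks sharing the most words with it.

    Returns (question, chunks) pairs so every question keeps its own evidence.
    """
    chunk_vocab = [words(c) for c in chunks]
    results = []
    for question in generate_questions(topic):
        q = words(question)
        ranked = sorted(range(len(chunks)),
                        key=lambda i: len(q & chunk_vocab[i]), reverse=True)
        # Drop chunks with no overlap at all rather than padding the answer.
        picked = [chunks[i] for i in ranked[:per_question] if q & chunk_vocab[i]]
        results.append((question, picked))
    return results
```

Keeping each question paired with its own chunks makes it easy to compare lessons built this way with lessons built from a single topic-level query.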

Fighting load-shedding with sunshine

None of this matters if the machine dies every time the grid does, which is a regular occurrence around Kharian. For long-term deployments out in the sticks, I think we’ll need something like a 100Ah LiFePO₄ battery paired with a cheap 300–500 W solar panel. Perhaps we forgo the PCs and go for laptops: a used ThinkPad has its own battery, which means one less controller board to fry in the midsummer heat. So far, parts come to around £80 for the computer and another ~£250 for the battery and solar kit (including the charge controller). The solar kit is by far the most expensive part of the project, and it’s the part I most want to optimise, so suggestions are very welcome. For now, I’ll be deploying the machines without solar backup.
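A rough power budget helps check whether the 100Ah battery and 300–500 W panel are over- or under-specified. Apart from the 100Ah and panel wattages, every input here is an assumption: a 12.8 V nominal LiFePO₄ pack, 90% usable capacity, a 90%-efficient inverter, a 40 W average draw for the PC, an eight-hour school day, and 5 peak sun hours a day at 75% overall system efficiency.

```python
# Back-of-the-envelope power budget. Every default below is an assumption
# for illustration, not a measurement of the real hardware.


def battery_runtime_hours(capacity_ah: float, voltage: float, load_watts: float,
                          usable_fraction: float = 0.9, inverter_eff: float = 0.9) -> float:
    """Hours a full battery can power a load: usable energy (Wh) / power drawn (W)."""
    usable_wh = capacity_ah * voltage * usable_fraction * inverter_eff
    return usable_wh / load_watts


def daily_solar_wh(panel_watts: float, peak_sun_hours: float = 5.0,
                   system_eff: float = 0.75) -> float:
    """Energy a panel delivers per day after wiring, controller and heat losses."""
    return panel_watts * peak_sun_hours * system_eff


LOAD_W = 40        # assumed average draw of the PC
SCHOOL_DAY_H = 8   # assumed hours of use per day

print(f"Runtime on a full 100Ah pack: {battery_runtime_hours(100, 12.8, LOAD_W):.1f} h")
for panel in (300, 500):
    print(f"{panel} W panel: ~{daily_solar_wh(panel):.0f} Wh/day "
          f"vs {LOAD_W * SCHOOL_DAY_H} Wh used per school day")
```

With those assumptions a full battery runs the PC for about 26 hours, and a 300 W panel yields roughly 1,125 Wh on a sunny day against about 320 Wh for a school day. If measurements on the real machine come out similar, a smaller kit might do, though cloudy days and summer heat both eat into that margin.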

What happens next?

The first classroom trial will use one ThinkCentre PC with an Intel Core i5-7500T and 8 GB of RAM, running my fine-tuned tiny model, 512 GB of offline educational content (Wikipedia, TED Talks, Khan Academy and more) via Internet-in-a-Box, and a simple app that ties it all together. The app will let teachers and kids paste in articles, select a grade level, and get a lesson, some questions, and a PDF to print out. It will also let teachers grade pupils’ answers and save the results.
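Grading a multiple-choice quiz against a lesson’s `answer_key` is simple enough to sketch. This assumes results are appended to a CSV file with one row per attempt; `grade`, `save_result` and the column layout are hypothetical, not the app’s actual design.

```python
import csv
from datetime import date
from pathlib import Path


def grade(answers: list[str], answer_key: list[str]) -> int:
    """Count answers matching the key (case-insensitive); missing or blank answers score 0."""
    return sum(a.strip().upper() == k.upper() for a, k in zip(answers, answer_key))


def save_result(path: Path, student: str, topic: str, score: int, total: int) -> None:
    """Append one row per attempt to a CSV file, writing a header the first time."""
    new_file = not path.exists()
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if new_file:
            writer.writerow(["date", "student", "topic", "score", "total"])
        writer.writerow([date.today().isoformat(), student, topic, score, total])
```

A CSV opens in any spreadsheet and needs no database on the classroom PC. For example, `grade(["a", "C", "C"], ["A", "B", "C"])` returns 2.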

If the little AI survives the Pakistani summer, I’ll open-source the whole stack: datasets, training scripts, the front-end, etc. Break it, fork it, translate it, carry it on. Whatever pushes the idea further and helps more children learn.

I’m rusty at writing, never mind blogging, but I’ll keep posting progress here: the triumphs, the learnings, the inevitable failures. If you’ve got suggestions, critiques, or a spare stick of DDR4 that deserves a better life, my inbox is open: [email protected].

I may be only 1 person on this project, but I’m not alone. Alongside me are countless children determined to learn, and teachers and parents determined to give them a better future.

This 1 person will start the revolution in that 1 dusty room, under that 1 bulb with that 1 computer. The orphans and the destitute, once left in darkness, will go on to light up the world.

“Let there be light.”