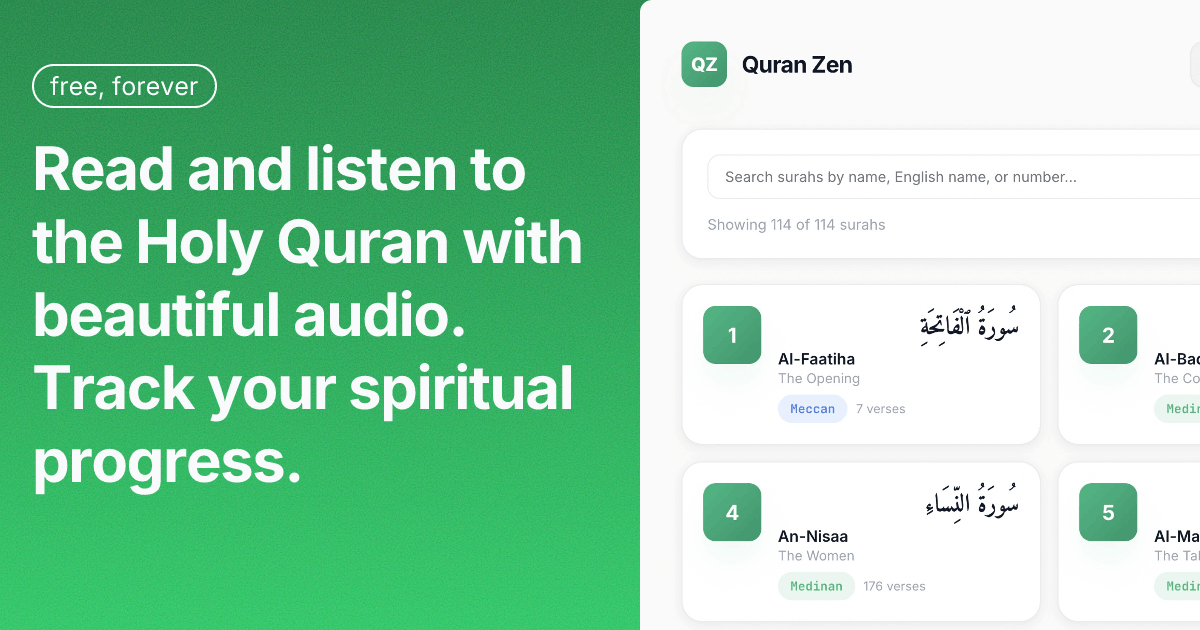

I wanted a clean way to read and listen to the Quran with multiple translations—without the bloated feel of existing apps. So I built QuranZen, which is now live at quranzen.com.

The core idea is straightforward: keep the static Quran data in a SQLite file on the backend server, and put user data in the cloud (Supabase).

Why split the databases?

Most apps dump everything into one database. I split it based on how the data actually gets used:

SQLite (static Quran data):

- 6,236 ayahs across 114 surahs

- 20+ translations (English, Urdu, etc.)

- Audio metadata for different reciters

- Lives on the backend server, so reads never wait on a remote database

Supabase (user data):

- Authentication

- Bookmarks, reading progress

- Row Level Security keeps users separated

- Syncs across devices

Quran data doesn't need to be in a cloud database: it's reference material that never changes. Reading it from SQLite means the backend never makes a network round trip to a database server. User data does need cloud sync and auth, so Supabase handles that.

The stack

Frontend is React 19 with Vite 7 and React Router v7. I used plain CSS instead of a component library—less to download, fewer abstractions to fight against. Framer Motion handles the animations.

Backend is FastAPI. Quran endpoints hit SQLite (no auth required). User endpoints verify JWTs and talk to Supabase. Rate limiting comes from slowapi.
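slowapi wires limits onto the routes for you, so none of this has to be written by hand. To show the idea underneath, here is a minimal fixed-window limiter using only the standard library. It is not slowapi's code and not what QuranZen runs; the class name `FixedWindowLimiter`, the limit values, and the client key are all made up for illustration.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, Tuple


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed time window."""

    def __init__(
        self,
        limit: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        # (client key, window index) -> requests seen in that window.
        # Old windows are never evicted here; a real limiter would clean them up.
        self._counts: Dict[Tuple[str, int], int] = defaultdict(int)

    def allow(self, client_key: str) -> bool:
        """Return True and count the request, or False if the client is over the limit."""
        window_index = int(self.clock() // self.window_seconds)
        bucket = (client_key, window_index)
        if self._counts[bucket] >= self.limit:
            return False
        self._counts[bucket] += 1
        return True
```

In a web app the client key would typically be the caller's IP address or user ID, and a `False` result would become an HTTP 429 response.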

How it works

Reading works like this: fetch the surah list, pick one, get the ayahs. The Quran data gets cached in the browser, so subsequent reads are instant. You can view multiple translations side-by-side.
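The read path above can be sketched with Python's built-in `sqlite3` module. This is a rough illustration, not the actual QuranZen schema: the table names (`surahs`, `ayahs`, `translations`), the column names, the edition codes, and both helper functions are assumptions, and the text values are placeholders rather than real content.

```python
import sqlite3
from typing import Dict, List


def open_demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory stand-in for the Quran database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row
    conn.executescript(
        """
        CREATE TABLE surahs (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE ayahs (
            surah_id INTEGER NOT NULL REFERENCES surahs(id),
            ayah_no  INTEGER NOT NULL,
            arabic   TEXT NOT NULL,
            PRIMARY KEY (surah_id, ayah_no)
        );
        CREATE TABLE translations (
            surah_id INTEGER NOT NULL,
            ayah_no  INTEGER NOT NULL,
            edition  TEXT NOT NULL,
            body     TEXT NOT NULL,
            PRIMARY KEY (surah_id, ayah_no, edition)
        );
        """
    )
    conn.execute("INSERT INTO surahs VALUES (1, 'Al-Fatihah')")
    conn.executemany(
        "INSERT INTO ayahs VALUES (?, ?, ?)",
        [(1, 1, "<arabic 1:1>"), (1, 2, "<arabic 1:2>")],
    )
    conn.executemany(
        "INSERT INTO translations VALUES (?, ?, ?, ?)",
        [
            (1, 1, "en", "<en 1:1>"), (1, 1, "ur", "<ur 1:1>"),
            (1, 2, "en", "<en 1:2>"), (1, 2, "ur", "<ur 1:2>"),
        ],
    )
    return conn


def list_surahs(conn: sqlite3.Connection) -> List[dict]:
    """Step 1: the surah list."""
    return [dict(row) for row in conn.execute("SELECT id, name FROM surahs ORDER BY id")]


def get_ayahs(conn: sqlite3.Connection, surah_id: int, editions: List[str]) -> List[dict]:
    """Step 2: ayahs of one surah, each with the requested translations side by side."""
    ayahs: Dict[int, dict] = {
        row["ayah_no"]: {"ayah_no": row["ayah_no"], "arabic": row["arabic"], "translations": {}}
        for row in conn.execute(
            "SELECT ayah_no, arabic FROM ayahs WHERE surah_id = ? ORDER BY ayah_no",
            (surah_id,),
        )
    }
    if editions:
        # Only "?" characters are interpolated; the values themselves stay parameterized.
        marks = ",".join("?" for _ in editions)
        rows = conn.execute(
            f"SELECT ayah_no, edition, body FROM translations "
            f"WHERE surah_id = ? AND edition IN ({marks})",
            (surah_id, *editions),
        )
        for row in rows:
            if row["ayah_no"] in ayahs:
                ayahs[row["ayah_no"]]["translations"][row["edition"]] = row["body"]
    return list(ayahs.values())
```

Calling `get_ayahs(conn, 1, ["en", "ur"])` returns one dict per ayah with both translations attached, which is the shape a side-by-side view needs. Since the data never changes, a response like this can be cached for as long as you like.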

For audio, you pick a reciter and the app streams MP3s directly from the backend. No processing overhead, just static files. The app tracks what you've listened to in Supabase if you're logged in.

Authentication is standard Supabase stuff. Log in, get a JWT, store it in localStorage, and include it with API requests. Row Level Security at the database level makes sure users can only see their own data.
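For a feel of what "verify the JWT" means on the backend, here is a standard-library sketch. It assumes the token is signed with HS256 and a shared secret, which is one way Supabase projects can be configured, and that the user ID sits in the `sub` claim. The function names are hypothetical, `sign_hs256` exists only so the example can run, and a real backend should use a maintained JWT library instead of hand-rolled checks like these.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    # JWT segments drop base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def sign_hs256(claims: dict, secret: str) -> str:
    """Demo-only helper: mint a token so the verifier below can be exercised."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret.encode(), f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def verify_hs256(token: str, secret: str, now: Optional[float] = None) -> dict:
    """Return the claims if the signature and expiry check out, else raise ValueError."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError as exc:  # wrong segment count, bad base64, or bad JSON
        raise ValueError("malformed token") from exc

    if not isinstance(header, dict) or not isinstance(claims, dict):
        raise ValueError("malformed token")
    # Pin the algorithm instead of trusting whatever the token claims to use.
    if header.get("alg") != "HS256":
        raise ValueError("unexpected signing algorithm")

    expected = hmac.new(
        secret.encode(), f"{header_b64}.{payload_b64}".encode(), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(signature, expected):
        raise ValueError("bad signature")

    current = time.time() if now is None else now
    if claims.get("exp", 0) <= current:
        raise ValueError("token expired")
    return claims
```

The backend would read the token from the `Authorization` header, run a check like this, and use `claims["sub"]` as the user ID. Row Level Security then acts as a second line of defense if an endpoint ever forgets to filter by user.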

There's also an Islamic Events module that scrapes Wikipedia, uses NVIDIA NIM to extract structured event data, and stores it locally for querying by date or category.

Deployment

The frontend builds to static files and is served from Cloudflare Pages. The FastAPI backend runs behind a Cloudflare Tunnel and also serves the audio files as static content.

Things I'd change

There are no tests yet. I'd add pytest for the backend and Vitest for the frontend. A CDN for audio would cut latency for listeners far from the server. Redis caching probably isn't necessary, since SQLite is already fast enough. I'd also add PWA support for offline reading.

The code is open source. Frontend, backend, events scraper, Docker setup, everything's in the repo.

If you're building an app with large static content plus small dynamic user data, this hybrid approach works well. SQLite handles the heavy reads, Supabase handles the writes and auth.